A/B split testing is a valuable feature of email automation: it lets you test variations in design or content to determine which is most effective for your audience. Run the test on a subset of your target contacts, then deploy the most successful variation across the rest of your list to help improve your conversion rate. Here’s a case study of how we’ve used it recently…

Background

Our client wanted to implement a partner registration welcome track: a series of emails sent at timed intervals over several weeks to their global list of new partners. With over 20 language variants of 7 to 8 emails each, this added up to more than 150 emails, a significant amount to design, build and test. Given the scale and effort involved, it was decided to first test two different layouts in English-speaking regions, and then use the results to maximise engagement across the remaining database.

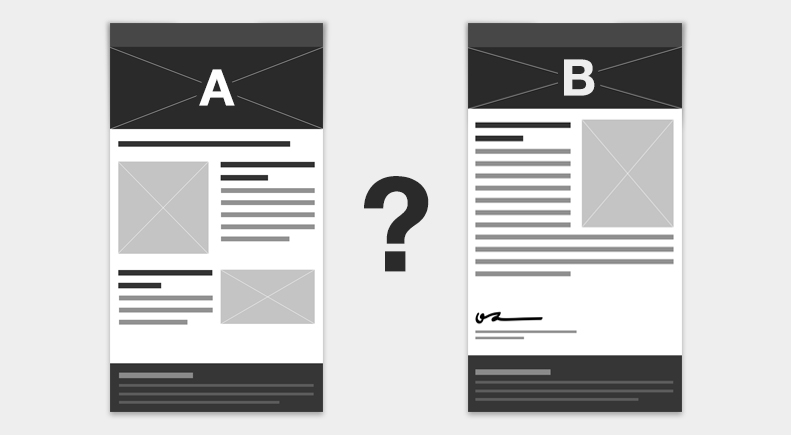

A/B Split

The two styles tested were an email newsletter template (illustrated as A) and a more traditional written letter (illustrated as B). These were deployed to only 15% of the total list. We ran the test for a few weeks to gather enough data to determine which style achieved more opens, more reads (thanks, Litmus) and more clicks (thanks, Eloqua). We then used these results to decide which style to use for the remaining 85% of the sends.
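For readers who want to reproduce this kind of split outside their automation platform, here is a minimal Python sketch. It is not how Eloqua segments contacts; the function name `split_for_ab_test`, the example addresses and the even A/B division of the test group are all assumptions made for illustration.

```python
import random


def split_for_ab_test(contacts, test_fraction=0.15, seed=42):
    """Randomly carve a test group out of a contact list.

    Returns (group_a, group_b, holdout). The test group is divided
    evenly between A and B, which is an assumption for this sketch.
    """
    rng = random.Random(seed)      # fixed seed keeps the split reproducible
    shuffled = list(contacts)      # copy so the caller's list is untouched
    rng.shuffle(shuffled)

    test_size = round(len(shuffled) * test_fraction)
    test_group = shuffled[:test_size]
    holdout = shuffled[test_size:]  # receives the winning style later

    half = test_size // 2
    return test_group[:half], test_group[half:], holdout


# Hypothetical list of 1,000 contacts, purely for illustration.
contacts = [f"partner{i}@example.com" for i in range(1000)]
group_a, group_b, holdout = split_for_ab_test(contacts)
print(len(group_a), len(group_b), len(holdout))  # 75 75 850
```

Shuffling before slicing matters: taking the first 15% of a list sorted by sign-up date or region would bias the test towards whichever contacts happen to come first.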

Results

For our audience (new partners receiving a welcome introduction), the letter style (B) was opened, read and clicked twice as often. It seems that, because recipients were being welcomed to a partner program, the more personable, concise style was more effective than the standard template and generated greater uptake. The letter style was therefore used for the remaining 85% of the sends, helping to produce a more engaged audience and a more successful campaign overall.
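If you want to check whether a difference like this is large enough to trust before rolling out the winner, one common approach is a two-proportion z-test. The sketch below is an illustration only, not the analysis used in this campaign; the function name `compare_rates` is hypothetical, and you would supply your own open, read or click counts.

```python
import math


def compare_rates(successes_a, sent_a, successes_b, sent_b):
    """Compare two response rates (e.g. opens or clicks) with a z-test.

    Returns (lift, z, p_value), where lift is rate B divided by rate A
    and p_value is two-sided. Assumes both groups have at least one
    send and that the rates are not all 0% or all 100%.
    """
    rate_a = successes_a / sent_a
    rate_b = successes_b / sent_b

    # Pooled rate under the assumption that A and B perform the same.
    pooled = (successes_a + successes_b) / (sent_a + sent_b)
    std_error = math.sqrt(pooled * (1 - pooled) * (1 / sent_a + 1 / sent_b))

    z = (rate_b - rate_a) / std_error
    p_value = math.erfc(abs(z) / math.sqrt(2))  # two-sided normal tail
    return rate_b / rate_a, z, p_value
```

A lift close to 2 with a small p-value (conventionally below 0.05) would suggest the gap between the two styles is unlikely to be down to chance alone.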